An Introduction to Neural Networks

As promised in the reCAPTCHA article, I want to talk about how artificial neural networks work. This information comes from a lecture that Cambridge PhD student Tomas Van Pottelbergh gave to some of my students last week, as well as an afternoon of reading books about AI.

You might remember learning about neurons in your brain from GCSE biology. One end of the neuron collects impulses from the other neurons around it, and when some critical threshold is passed the neuron fires, generating a brief surge of current which in turn may trigger other neurons. Gather together roughly 86 billion of these and you form a human brain.

It is tempting to think of them like transistors in a circuit: taking inputs of 0s and 1s and outputting similarly. However, that is a simplification which throws away some of the detail that allows for more complicated patterns. We’ll look at better models further down in the article, but for now let's look at one of the simpler models for a neuron: integrate and fire.

Below we can see a graph of the charge in a neuron which is having surges applied to it. After each shock the level of charge jumps up and then slowly falls back down again. However, enough shocks in short succession will push it above the threshold for firing.
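The build-up and decay described above can be sketched in a few lines of Python. This is only a minimal illustration of a leaky integrate-and-fire neuron, not the model used in the lecture: the function name `simulate_lif`, the pulse size, the decay time `tau`, the threshold and the time step are all made-up values in arbitrary units.

```python
def simulate_lif(pulse_times, pulse_size=0.4, tau=10.0, threshold=1.0,
                 dt=0.1, t_end=100.0):
    """Leaky integrate-and-fire sketch (all parameters are illustrative).

    Between pulses the charge v decays according to dv/dt = -v / tau.
    Each input pulse adds pulse_size to v. When v reaches the threshold
    the neuron fires and v is reset to zero.
    Returns the list of times at which the neuron fired.
    """
    pulse_steps = {round(t / dt) for t in pulse_times}
    v = 0.0
    spikes = []
    for step in range(int(round(t_end / dt))):
        v += dt * (-v / tau)          # forward Euler step for the leak
        if step in pulse_steps:
            v += pulse_size           # an incoming shock bumps the charge
        if v >= threshold:
            spikes.append(step * dt)  # record the firing time
            v = 0.0                   # reset after firing
    return spikes


# Shocks far apart decay away before the next one arrives.
print(simulate_lif([10, 40, 70]))
# The same three shocks in quick succession add up and cross the threshold.
print(simulate_lif([10, 11, 12]))
```

With these assumed values a single shock only gets the charge to 0.4, so the neuron fires only when several shocks arrive before the earlier ones have leaked away.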

This model is simple enough that it only requires solving one differential equation. That can be done quickly enough to simulate networks of thousands of neurons. These neural networks can be used to learn all sorts of different tasks. For example, there is a classic problem in engineering called the Cocktail Party Problem.

The idea is that at a noisy cocktail party humans are quite good at locking on to the voice of one person, even though the noise is arriving at their ears from many different sources at different pitches, tempos and volumes. Your brain is wired to concentrate on one stream and relegates everything else to the background (which it makes you aware of if your subconscious deems it important, such as if it hears your name or a shocking word like a swear word). All of this is well tested and has been observed in even very young brains.

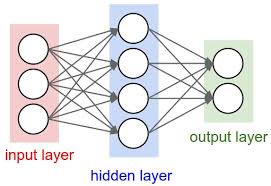

However, classical computers have not been good at this task. Notice that it is the same problem as trying to separate out the various tracks in a piece of music into vocals, drums etc. This is where neural networks come in. In 2015 a series of artificial neurons were set up in a random manner. The input layer receives inputs such as the tempo in a particular time period, the mean volume, the overall shape of the sound waves etc., and each neuron is set to trigger if some set of random parameters is met. They feed into the hidden layer, which is just another chain of neurons. Each neuron takes in inputs from random other neurons, applies a weighting as to how much it cares about each neuron feeding into it, and fires off at some random threshold. Eventually all of this leads to a layer of neurons which give the outputs that you can read. Notice that all of this is completely arbitrary: there is no programming to make it recognise anything in particular.
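To make the layered structure concrete, here is a small sketch of a randomly wired network passing a signal from inputs to outputs. It is an illustration under simplifying assumptions rather than the 2015 set-up: the layer sizes, the weight and threshold ranges, the helper names (`make_layer`, `run_layer`, `run_network`) and the three input numbers are all invented, and every neuron here listens to every neuron in the previous layer.

```python
import random


def make_layer(n_inputs, n_neurons, rng):
    """Give each neuron one random weight per input and a random threshold."""
    return [
        {
            "weights": [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)],
            "threshold": rng.uniform(0.0, 1.0),
        }
        for _ in range(n_neurons)
    ]


def run_layer(layer, inputs):
    """A neuron outputs 1 if its weighted input sum reaches its threshold."""
    outputs = []
    for neuron in layer:
        total = sum(w * x for w, x in zip(neuron["weights"], inputs))
        outputs.append(1 if total >= neuron["threshold"] else 0)
    return outputs


def run_network(layers, inputs):
    """Feed the signal through each layer in turn."""
    signal = inputs
    for layer in layers:
        signal = run_layer(layer, signal)
    return signal


rng = random.Random(0)   # fixed seed so the random wiring is repeatable
sizes = [3, 5, 2]        # 3 input features, 5 hidden neurons, 2 outputs
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

features = [0.8, 0.3, 0.5]  # made-up stand-ins for tempo, volume, wave shape
print(run_network(layers, features))
```

Because the weights and thresholds are random, the output means nothing yet. That only changes once the weights start being adjusted.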

In the experiment the scientists fed in 50 songs, 20 seconds at a time. They would receive some output which they hoped was the isolated vocal track, but for the most part the computer would not be successful with its random attempt. However, the scientists would let the program iterate, strengthening connections which led to correct results and lowering the weighting on those that did not.
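The idea of nudging weights up or down depending on whether the answer was right can be shown with the classic perceptron learning rule on a single neuron. This is a swapped-in textbook technique chosen for brevity, not the training method used in the experiment, and the toy task (fire only when both inputs are on), the learning rate and the number of passes are assumptions.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum plus bias is non-negative, else stay quiet (0)."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= 0 else 0


def train(examples, n_inputs, rate=0.1, epochs=100):
    """Perceptron rule: only wrong answers change the weights."""
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)  # -1, 0 or +1
            for i, x in enumerate(inputs):
                # Active inputs are strengthened if the neuron should have
                # fired and weakened if it fired when it should not have.
                weights[i] += rate * error * x
            bias += rate * error
    return weights, bias


# Toy task (an assumption for illustration): logical AND of two inputs.
examples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train(examples, n_inputs=2)
print([predict(weights, bias, inputs) for inputs, _ in examples])
```

A real network has many layers and far more weights, so it needs a more elaborate update scheme, but the loop of guess, compare and adjust is the same.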

There were billions of parameters for the network to tinker with, but eventually the network learned some way of working out what a voice sounds like compared with the backing music. How it does that is unclear to the humans looking on, but the AI has found some pattern to recognise. The team then tried a further 13 songs which the neural network hadn't been trained on, and it was able to separate out the vocals without any further training. This is an example of machine learning.

All of this is with a simplified model of a neuron, but better models exist. Being able to model the levels of each ion in the neuron over time leads to subtly different threshold levels. If you need some quota of potassium, sodium, calcium etc. to spike, and each rises and falls, then everything gets quite complicated. The next level up from integrate and fire is the Hodgkin-Huxley model, which simulates the levels of the two most important ions (sodium and potassium) as well as whether there are spikes from other neurons and a catch-all “other” category for ions. This is much closer to reality, but it leads to a system of 4 differential equations with nonlinear behaviour. There are approximate solutions which involve using Fourier transforms, but as you can imagine this makes the time to simulate them much longer. Instead of tens of thousands of neurons we can only simulate much smaller networks. In some ways we are just waiting for computers to catch up.
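For comparison with integrate and fire, here is a rough sketch of a single Hodgkin-Huxley neuron stepped forward with the simple Euler method. The four quantities being updated (the voltage `v` and the gate variables `m`, `h`, `n`) correspond to the 4 differential equations. The constants are the commonly quoted textbook values for the squid giant axon, and the function names, the time step and the injected currents are choices made for this illustration rather than anything from the lecture.

```python
import math

# Commonly quoted textbook values; units are mV, ms, uA/cm^2 and mS/cm^2.
C_M = 1.0                                # membrane capacitance
G_NA, G_K, G_L = 120.0, 36.0, 0.3        # sodium, potassium, leak conductances
E_NA, E_K, E_L = 50.0, -77.0, -54.387    # matching reversal potentials


def _ratio(x, y):
    """x / (1 - exp(-x / y)), using its limit y when x is close to zero."""
    if abs(x / y) < 1e-6:
        return y
    return x / (1.0 - math.exp(-x / y))


def rates(v):
    """Opening (a_) and closing (b_) rates of the m, h and n gates at voltage v."""
    a_m = 0.1 * _ratio(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _ratio(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def count_spikes(i_ext, t_end=50.0, dt=0.01):
    """Simulate one neuron with constant input current; count upward 0 mV crossings."""
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
    # Start each gate at its resting (steady-state) value.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    spikes = 0
    for _ in range(int(t_end / dt)):
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        i_na = G_NA * m ** 3 * h * (v - E_NA)   # sodium current
        i_k = G_K * n ** 4 * (v - E_K)          # potassium current
        i_l = G_L * (v - E_L)                   # catch-all leak current
        new_v = v + dt * (i_ext - i_na - i_k - i_l) / C_M
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        if v < 0.0 <= new_v:
            spikes += 1
        v = new_v
    return spikes


print(count_spikes(0.0), count_spikes(10.0))
```

Even this one neuron needs several exponentials per time step, which gives a feel for why networks of them are so much more expensive to simulate.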

Large projects do exist to create neural nets simulating the human brain (currently one in the EU, one in the US and a third in Japan), but simply connecting together enough artificial neurons isn't enough to gain anything useful. I understand these projects are controversial because they attract a lot of funding without clear aims for research. Van Pottelbergh was quite disparaging of such projects in the lecture and indicated that more could be gained either from finding better and better models for single neurons or from trying to find patterns in small neural networks. By simply throwing lots of neurons at the problem we have got to about the point of simulating one column of a rat’s brain, but it doesn't gain us much.

However, the advances in machine learning through this process have been fantastic in recent years. Internet giants such as Facebook, Amazon and Google all use machine learning through neural networks to suggest purchases to you. YouTube’s algorithm for predicting the next video to watch is similar: these are all AIs in action. In a slightly less corporate vein we have AlphaGo, a neural-network-based machine learner which won 4 out of 5 games against one of the world's top professional players in the game of Go last year. This game has over 10^720 different possibilities, so it is well out of the realm of brute force, and it was predicted that a computer would never beat a top human right up until it happened.